I'm working so hard, but can't seem to make a difference! I'm running at top speed, but don't seem to be getting anywhere! According to Keith Murnighan, I might be trying too hard and doing too much. In fact, if we are going to accomplish bigger things, we may need to actually Do Nothing.

The premise of this easy read is that the skills and instincts that got us moved into newer, bigger roles will ultimately kill our chances of succeeding in them. I've seen this happen in my own career, and I've seen it in others' as well. I was once promoted to lead the team I had previously been a part of. How was I going to make this work? How could I earn their respect and trust as their leader? I chose to show them that I was still "one of them" - that no work was beneath me, that I would still work as hard as any of them. Isn't that "servant leadership" after all? But my team now needed something different. If I provided the leadership they needed, they could more than handle all of the projects, tasks and deadlines that came their way. I made a lot of mistakes as a newly minted leader, many of them rooted in my failure to change the way I thought and worked.

From the years of experience I have since had, I know Murnighan is right. To succeed - to give my company and clients the greatest benefit - I need to do less and less. This is still difficult for me - I still derive tremendous satisfaction from working in the trenches with a team to deliver a solution of some sort. But Covenant needs lots of good Project Managers and Analysts, not just one, and they're not going to materialize if I am focusing only on my own stuff.

I still need to work on solution delivery, but I also need to help Covenant achieve "leverage" ("a high ratio of juniors to seniors" - see Managing the Professional Services Firm, David Maister). Few organizations can afford to have only senior-level employees: instead they must focus on developing new talent that can do much of the work, and do it at much earlier stages of their career.

Contrary to his book's title, Murnighan is not advocating laziness, but rather that we work very hard on different things. When we work on the right stuff we will make a difference, and we will get somewhere - bringing others along with us as we go.

The rest of the book seemed more like a potpourri of advice for leaders - some of it very good - and all of it connected in some way to the theme of doing nothing.

Alan W Richardson on Project Management

Wednesday, August 5, 2015

Friday, January 16, 2015

Closing a Project

Ending a project cleanly is easier said than done. People are rolling off the project and getting pulled into the next thing... frankly, we all just want to move on. So how do we make sure that this important part of every project gets the attention it needs?

At Covenant our projects are approached in phases. We always start with a planning phase and we always end with a "Transition" phase. (What happens in between depends on Agile vs. Waterfall.) The Transition phase begins when the solution is turned over to the client for testing and validation. It ends when the client accepts the solution and starts using it.

Part of the problem is that we often don't clearly define what needs to be done. Put together a budget summary? Meet with the client? Sure, that makes sense... but there is much more. In fact, I've been focusing on this aspect of our own methodology lately, defining what a project audit should look like at the close of a project, and I was surprised at how many things needed to get done.

Below is my scorecard checklist - take a look and let me know a) if it is helpful to you, and b) if you do anything that I haven't covered.

| Item | Description |
|---|---|
| Schedule | Did we finish within the approved schedule? |
| Budget | Did we finish within the approved budget? |
| Change Requests | All changes in scope, schedule, and budget are documented, approved and online. |
| Requirements | Finalized and uploaded |
| Technical Documents | Finalized and uploaded |
| Technical Audit | Have the architecture and technical design been finalized and reviewed? |
| Client Budget Summary | Summary sent to client showing original budget, revised budget (listing CRs), and actual cost. |
| Client Project Close Meeting | Review deliverables from SOW/Charter. Confirmed acceptance from Client. |
| Return Client Property | Return badges, confidential documents etc. to client |
| Conduct Lessons Learned | (This will be a topic for another day.) |
| Archive Project | We use Project Server for everything - the project is moved out of Project Server and into a SharePoint archive. |
| Provide Feedback | Written performance feedback on all team members sent to employees and managers. |
| Notify Client Manager | Our Client Managers work on surveys, white papers etc. |
| Turnover to Support | |
| Project Summary | Sent to executives etc. |
| Team Recognition | Team celebration, individual recognition |

Thursday, April 24, 2014

1 Week Sprints

What to do? Typically, I revert to waterfall for projects this short: a single, long Sprint.

However, that assumes you have buttoned-down design up front. In this case - developing an intranet - we don't have that. The design of the web site will be heavily influenced by feedback throughout the development process.

So we are going to 1 week Sprints, with only 1 Developer and 1 Designer. This will give the Customer more than 4 reviews during which feedback can be provided.

We are just starting Sprint 3 and here's what I've learned so far:

- Yikes - this is fast! It forces a daily deployment cadence - not something we're used to. We can't wait until Day 3 to start testing - the Developer won't get the defects until the Sprint is almost shot. Daily deployment is an adjustment for us - I'm curious to see how it goes.

- My Sprint burndown! - my beautiful Sprint burndown! It doesn't ever really burn down any more. We have stories that have tasks for both the Designer and the Developer. The Designer has to get done first. If he starts his tasks on day 4 of the Sprint, the Developer doesn't stand a chance of getting his part done in the current Sprint. This means that a number of User Stories are left incomplete at the end of the Sprint. That's not a problem, because we simply push them forward into the new Sprint. The first Sprint you do this, your velocity takes a hit, but then it washes out going forward. But the Sprint burndown doesn't reach zero hours any more. Oh well, at least the Release Burndown still makes sense.

- Customer visibility = check. The Customer is seeing things much more often - and this is a good thing. In fact, it is the point of the adjustment to 1 week Sprints.

- Constant Pressure. There is no slack time in the Sprint. It's not the same as a death march, which typically implies working nights and weekends, with quality being jettisoned. Rather, there is simply no time to lose, no time to waste. This maximizes personal accountability for everyone involved - even the Project Manager! The finish line is in full sight the moment the Sprint starts.
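The point about carried-over stories can be checked with a little arithmetic. This is only a sketch under made-up assumptions: the function name, the 20 planned points, and the 5 points that spill over each Sprint are all hypothetical, not figures from this project.

```python
def completed_points_per_sprint(planned_per_sprint, carried_over_per_sprint, n_sprints):
    """Points credited in each Sprint when some work always finishes one Sprint late.

    Hypothetical model: every Sprint starts `planned_per_sprint` points of new
    work, and `carried_over_per_sprint` of those points are only finished in
    the following Sprint.
    """
    completed = []
    carried_in = 0  # unfinished points inherited from the previous Sprint
    for _ in range(n_sprints):
        finished_new = planned_per_sprint - carried_over_per_sprint
        completed.append(carried_in + finished_new)
        carried_in = carried_over_per_sprint
    return completed


velocities = completed_points_per_sprint(
    planned_per_sprint=20, carried_over_per_sprint=5, n_sprints=4
)
print(velocities)  # [15, 20, 20, 20]
```

Only the first Sprint comes up short. From then on, the points inherited from the previous Sprint offset the points pushed into the next one.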

Here are a couple of good, quick reads on the subject:

- Choosing the right Sprint length

- Choosing a Sprint length - Shorter Trumps longer (with helpful diagram)

Thursday, April 17, 2014

Sprints - How few is too few?

I believe firmly that there is a minimum number of Sprints necessary for Agile to work: 4.

That's right, any fewer than 4 Sprints and you won't get the Customer feedback you need. Think about this:

- Sprint 1 Review: Typically this is a barebones presentation. The product (website etc.) doesn't look like much yet, and the Customer generally says, "Great - glad to know we're progressing."

- Sprint 2 Review: Okay, now there's something to see and the feedback starts coming in.

- Sprint 3 Review: More to see = more feedback.

- Sprint 4 Review: You're done. UAT ("hardening") may follow and will produce feedback, but it should be tweaking the product, not continuing to design it.

So that's 2 touchpoints for the Customer. That is barely useful - you run the risk of the Customer saying at the end of Sprint 3: "Wait a minute! You're telling me that we only have 1 Sprint left and it's almost full already? - I barely got to provide any input! This product isn't even close to being ready."

You are now in a sticky situation.

Even with 4 Sprints.

So what do you do if the project won't sustain 4 or more Sprints?

- Reduce the Sprint duration. E.g. from 2 weeks to 1 week. That will allow you to double the number of Sprints without affecting budget or schedule.

- Don't use Sprints. The project may be short enough that you can do all of the design up front and just have a single development/test cycle. In other words, don't use Scrum.
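To see what shortening the Sprint buys you, here is a rough sketch of the arithmetic. The function names and the 8-week schedule are hypothetical. The "minus 2" reflects the reasoning above: the first review shows too little to draw feedback, and the last comes too late to act on it.

```python
def sprint_count(project_weeks, sprint_weeks):
    """Whole Sprints that fit in the schedule."""
    return project_weeks // sprint_weeks


def feedback_reviews(n_sprints):
    """Reviews likely to yield design feedback.

    Assumption: the first review is too barebones to draw much feedback,
    and the final review comes too late to act on it.
    """
    return max(n_sprints - 2, 0)


for sprint_weeks in (2, 1):
    n = sprint_count(project_weeks=8, sprint_weeks=sprint_weeks)
    print(f"{sprint_weeks}-week Sprints: {n} Sprints, {feedback_reviews(n)} useful reviews")
```

With the same 8 weeks, moving from 2-week to 1-week Sprints takes the Customer from 2 useful touchpoints to 6.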

Have you had to deal with adapting Agile to smaller projects like this? How have you handled it?

Wednesday, October 16, 2013

Passing the PMI-ACP (Agile Certified Practitioner)

Why I Took It

I've been using Agile approaches for the past couple of years and knew that there was much I hadn't learned yet. I wanted to broaden my understanding and have a way of easily communicating to folks that I have this skill. I saw 3 options:

- CSM (Certified Scrum Master). This is the most popular industry-recognized credential. It is also the easiest to obtain. Unfortunately, that's also its downside: if you have 21 PDUs and can pass the exam, you're a CSM. Even Agile Purists don't like the fact that folks with no required experience can call themselves "Master".

- CSP (Certified Scrum Professional). The creators of CSM - Scrum Alliance - more recently came out with the CSP. (CSM is a prerequisite for CSP.) It addresses the shortfall of CSM by requiring prior experience - at least 2000 hours over the past 2 years.

- ACP (Agile Certified Practitioner). This is PMI's Agile offering, which is interesting as they have long held that the PMBoK/PMP covered everything including Agile. The recommended reading list doesn't even include PMBoK (although it's assumed you already know it well). I'm glad they have something specifically for Agile, because it IS different. There, I said it. It one-ups CSP in that you have to maintain your certification with ongoing PDUs.

I went with the ACP, for these reasons:

- The ACP exam - and therefore preparation materials - cover more than just Scrum. I like Scrum - it's the only framework I've used, but it's not the only one out there. I wanted to better understand XP, DSDM, Kanban, Crystal, Lean etc. The ongoing PDU requirement gives it more credibility than CSP. You simply can't rest on your laurels in this business.

- PMI is the most widely recognized authority for Project Management. I'm a consultant and the credentials matter. (If you're not a PM, CSP may be a stronger option.)

- I already have my PMP which takes care of some of the prerequisites. I would have had to get the CSM before I could start towards the CSP.

How I Passed It

I scored the highest rating in each knowledge area. (Better than I did on the PMP!)

PMI recommends 12 books on a variety of Agile topics - some of which I have previously read. However, I didn't want to purchase and read all of these books right now, so I kept looking for help. Here's what I went with...

Agile PrepCast - more information (compensated affiliate)

I listened to an episode of Andy Kaufman's terrific "People and Projects Podcast" in which he interviewed Cornelius Fichtner of "PM Podcast" fame. The subject was the PMI-ACP and Cornelius was promoting his Agile PrepCast. I followed up and learned that I could get all the required PDUs via podcasts on my iPhone. I had already calculated the cost of out-of-town Scrum learning and this came in at 5% of that. I also much prefer learning at my own pace. I was sold!

Exam Prep Book by Andy Crowe

Andy's PMP exam prep book was excellent and so this was a no-brainer for me when it came out. It is brief and covers only the essentials. It will help you pass the exam, but it is too shallow to be a real Agile learning tool. That's a perfect complement to the PrepCast, which went into much more detail. It comes with 2 practice exams, which I thought lined up well with the actual exam.

Agile Books and Practice

I have read a few good books on Agile over the past couple of years, most importantly Mike Cohn's Agile Estimating and Planning. This is focused on Scrum methods, but it's a great place to start for Agile newbies - and a solid reference for more experienced folks. Other good books: User Stories Applied (Cohn), Project Management the Agile Way (Goodpasture), Crystal Clear (Cockburn), and Lean Software Development (Poppendieck).

It certainly didn't hurt that I had run several significant Agile projects over the last couple of years. Much of the learning served to formalize and crystallize the knowledge already in my head.

Let me know if this information has been helpful to you - and I wish any of you seeking certification the greatest success!

Thursday, August 29, 2013

When Is A Requirement Not A Requirement?

Agile is supposed to provide a certain amount of elasticity in a project. Scope isn't a prison cell, but an uncharted country to be explored and defined as opportunities and obstacles present themselves. Okay, perhaps that's a little romantic, but the idea is that scope can morph as things progress. Change is good, not bad. Some Agile projects nevertheless end up with heavy scope-related tension. Agile promised the business that they could have whatever they wanted within the given time and budget, as long as they engaged in continuous value-based prioritization. "If you want to put more water in the bucket, you have to empty some out first." This quickly becomes a problem, however, when nothing in the bucket is expendable. "We've had a great idea, but can't afford to take anything out to make room for it."

Hello tension.

The project manager has three bad answers to choose from:

- "That's phase 2!"

- "That's a change request!"

- "We'll squeeze it in somehow!"

What went wrong? Agile was supposed to allow for flexibility in scope - it's the one point on the iron triangle (budget-schedule-scope) that was not fixed.

The problem may well be with the water in the bucket. If all the water is needed - if all scope is considered necessary - then you really have no room for flexibility. The budget and schedule may have been carefully and accurately planned to account for the amount of scope in play, but all of the requirements are required.

Enter MoSCoW analysis. It stands for:

- Must have

- Should have

- Could have

- Won't have

This is an old method for categorizing requirements. We all typically do this at some point - evaluating what simply has to be done, then focusing our attention on those things. Often, everything else is deferred, having been deemed unimportant.

Agile can make excellent use of MoSCoW, although in a different way.

In the planning phase of your Agile project, help the business see the requirements through the MoSCoW filter. If everything looks like a Must Have you have a problem: these requirements are required... they aren't negotiable, and when you need scope flexibility later on, it won't be there.

If everything is falling into the "Must Have" bucket, the business folks probably aren't thinking hard enough. Even if all of those requirements are critical, there are always other requirements that are less so - dig a little deeper. In the rare case where this isn't so, scope is fixed and Agile is probably not the right answer.

So how many requirements can be required? A rule of thumb is 60%. The business expects to get 60% of the project scope delivered - anything less is failure. They've made commitments and they expect you to do the same with these requirements. The other 40% is malleable. That's your scope flexibility. When something new comes up midstream, one of these Should Have or Could Have requirements can be taken out to make room for it. Or, perhaps de-prioritized... moved below the line. (The line may move as things take more or less time than expected to complete, perhaps bringing that requirement back into the project.)
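The 60% rule of thumb is easy to check once the backlog has been sized. Here is a minimal sketch: the backlog items, their point values, and the names `scope_share_by_category` and `MUST_HAVE_LIMIT` are all hypothetical. It also assumes Won't Have items are left out of the total, since they are out of scope.

```python
from collections import defaultdict

MUST_HAVE_LIMIT = 0.60  # the rule of thumb from the paragraph above

# Hypothetical backlog: (story, MoSCoW category, story points)
backlog = [
    ("Single sign-on", "Must", 13),
    ("Document search", "Must", 8),
    ("News feed", "Must", 8),
    ("Staff directory", "Should", 8),
    ("Custom themes", "Could", 5),
    ("Mobile app", "Won't", 20),
]


def scope_share_by_category(items):
    """Share of in-scope points per category; Won't Have items are excluded."""
    points = defaultdict(int)
    for _, category, story_points in items:
        if category != "Won't":
            points[category] += story_points
    total = sum(points.values())
    if total == 0:
        return {}
    return {category: pts / total for category, pts in points.items()}


shares = scope_share_by_category(backlog)
must_share = shares.get("Must", 0.0)
print(f"Must Have share: {must_share:.0%}")
if must_share > MUST_HAVE_LIMIT:
    print("Too much is required: little room left for scope flexibility.")
```

In this made-up backlog the Must Haves come to 29 of 42 in-scope points, so the check flags it. That is the cue to go back to the business and dig a little deeper.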

Remember that not all requirements are created equal. Some are not required. Be clear up front with the business about this, and work with them to find what aren't Must Haves. This is essential to maintaining scope flexibility, which is essential to Agile.

Friday, March 29, 2013

Burndown Charts 2: "Detailed Burndown"

So we've looked at a Simple Burndown Chart as a way of representing progress on an Agile project. Now we'll look at one that presents a little more detail. As a reminder, there is no one-size-fits-all approach to release burndowns: you have to tinker with it to suit your audience and the type of work.

I am leading development teams for Covenant Technology Partners, a consulting firm that heavily leverages Microsoft technologies. My clients are typically new to Agile, and most have never seen a burndown chart before. So I want to keep it simple, showing schedule, scope, and budget in a way that flows with our 2 week sprints. I report on other things, such as progress against themes, and issues/risks, but I'll discuss those another day.

The key difference between the charts below and the simple charts in the first post is that these separate the impact of velocity from scope changes. I can now see how many points the team is burning in each sprint, unclouded by variability in scope.

The blue bars represent the amount of work remaining on the project. Everything above the zero point line is what remains of work added at the start of the project. Everything below the line represents what remains of work added after the start.

The advantage of this over the simple charts is that I can see how the team is performing - every point delivered comes off the top of the chart. Now I can draw a straight (blue) line and get a better idea of velocity.

As stories are added to the project, the bars extended beneath zero. Of course, they can also shrink as stories are removed from scope. I've added a green trend line for scope growth - I'm using a log (curve) not linear (straight) line because I anticipate that most new scope will get added earlier in the project.

What matters most now is not when the blue line crosses zero, but when it intersects with the red dotted line - that is when all stories will be complete.

The drawback of this chart is that the negative growth is unintuitive for many, requiring additional explanation. Also, it seems that I need to add extra sprints in order to show the intersection line (assuming more scope is being added than will fit, which is typical). The problem is that my budget only covers 7 Sprints, but the chart now implies 8.

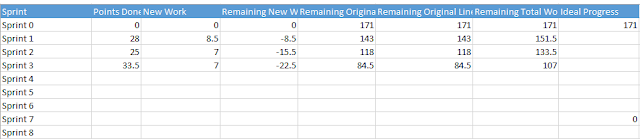

Here's the data behind the chart.

This chart seems to work best for me. Again, velocity is clear and so is the total scope. In this scenario, the project has 7 sprints and there will be about 30 points left in the backlog at the end of Sprint 7. Here is the source data.

The problem with burndown charts that show scope growth is that they tend to imply that new scope is "extra" - and therefore, bad. Scope creep. When presenting this chart to the business, we have to take care to avoid communicating this. Instead we should state clearly that the only reason for separating out original vs. new scope is that it allows us to track team performance.

What the business wants in the backlog is entirely up to them. They just have to square it with their budget - which in an Agile project equals schedule. In the above example, 30 points will not make it into the project. This is not a bad or unusual thing: as a project moves along new value is envisioned and added in. The key is for the business to prioritize the backlog (in collaboration with the project team), so that the 30 points represent the least important user stories.

I am leading development teams for Covenant Technology Partners, a consulting firm that heavily leverages Microsoft technologies. My clients are typically new to Agile, and most have never seen a burndown chart before. So I want to keep it simple, showing schedule, scope, and budget in a way that flows with our 2 week sprints. I report on other things, such as progress against themes, and issues/risks, but I'll discuss those another day.

The key difference between the charts below and the simple charts in the first post is that these separate the impact of velocity from scope changes. I can now see how many points the team is burning in each sprint, unclouded by variability in scope.

Burndown Showing Scope As Negative

The blue bars represent the amount of work remaining on the project. Everything above the zero point line is what remains of work added at the start of the project. Everything below the line represents what remains of work added after the start.

The advantage of this over the simple charts is that I can see how the team is performing - every point delivered comes off the top of the chart. Now I can draw a straight (blue) line and get a better idea of velocity.

As stories are added to the project, the bars extended beneath zero. Of course, they can also shrink as stories are removed from scope. I've added a green trend line for scope growth - I'm using a log (curve) not linear (straight) line because I anticipate that most new scope will get added earlier in the project.

What matters most now is not when the blue line crosses zero, but when it intersects with the red dotted line - that is when all stories will be complete.

The drawback of this chart is that the negative growth is unintuitive for many, requiring additional explanation. Also, it seems that I need to add extra sprints in order to show the intersection line (assuming more scope is being added than will fit, which is typical). The problem is that my budget only covers 7 Sprints, but the chart now implies 8.

Here's the data behind the chart.

Burndown Showing Scope as Positive

Scope Creep?

Unlike waterfall projects, scope on an Agile project is intentionally fluid. We don't lock the business into a predefined list of deliverables, but instead allow - even encourage - them to modify scope along the way. That means that original vs. new scope is largely irrelevant. (Shocking, I know.)The problem with burndown charts that show scope growth is that they tend to imply that new scope is "extra" - and therefore, bad. Scope creep. When presenting this chart to the business, we have to take care to avoid communicating this. Instead we should state clearly that the only reason for separating out original vs. new scope is that it allows us to track team performance.

What the business wants in the backlog is entirely up to them. They just have to square it with their budget - which in an Agile project equals schedule. In the above example, 30 points will not make it into the project. This is not a bad or unusual thing: as a project moves along new value is envisioned and added in. The key is for the business to prioritize the backlog (in collaboration with the project team), so that the 30 points represent the least important user stories.
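The 30-point shortfall is simple arithmetic: total scope minus what the team can deliver in the funded Sprints. The sketch below uses hypothetical figures (140 original points, 30 added, a velocity of 20) picked only because they reproduce a 30-point gap over 7 Sprints. They are not the source data behind the chart, and `points_left_over` is an illustrative name.

```python
def points_left_over(total_scope, velocity, funded_sprints):
    """Backlog points that will not fit in the funded Sprints."""
    return max(total_scope - velocity * funded_sprints, 0)


# Hypothetical figures chosen to reproduce a 30-point shortfall.
original_scope = 140  # points in the backlog at project start
added_scope = 30      # points added along the way
velocity = 20         # average points delivered per Sprint
funded_sprints = 7    # what the budget covers

left = points_left_over(original_scope + added_scope, velocity, funded_sprints)
print(left)  # 30
```

Whether those leftover points came from the original backlog or were added later makes no difference to the calculation. Only their priority matters.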

Beware Certainty

Don't read too much into the lines. This is Agile, and averages can be misleading. If a team completes 20 points in Sprint 1 and 40 points in Sprint 2, the velocity is not necessarily 30 points. It is likely between 20 and 40, and that means the "line" could cross the zero axis in a couple of different places, i.e. it might take 5 sprints, or it might take 7 sprints. Save yourself some headaches and be sure to communicate this to your client. Velocity will become clearer and the ranges narrower as the project progresses, but it will never be fully understood until the project is complete!
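One way to communicate that range is to project the finish using the slowest and fastest Sprints seen so far, rather than the average. This is a rough sketch: the 20 and 40 point velocities come from the example above, while the 140-point backlog and the name `sprints_needed_range` are hypothetical.

```python
import math


def sprints_needed_range(backlog_points, observed_velocities):
    """(best case, worst case) Sprints to finish, from the fastest and slowest Sprint so far."""
    slowest = min(observed_velocities)
    fastest = max(observed_velocities)
    return math.ceil(backlog_points / fastest), math.ceil(backlog_points / slowest)


# 20 and 40 are the velocities from the example; the 140-point backlog is hypothetical.
best, worst = sprints_needed_range(backlog_points=140, observed_velocities=[20, 40])
average_case = math.ceil(140 / 30)
print(f"Between {best} and {worst} Sprints (a 30-point average would say {average_case})")
```

For this backlog the average suggests 5 Sprints, but the honest answer is anywhere from 4 to 7. The range narrows as more Sprints are observed.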
